Matching is used to reduce bias in quasi-experimental designs by pairing participants in the intervention group with participants in the control group who have similar characteristics. This can reduce the influence of confounding variables on the study’s results, but only for the characteristics that are measured and matched on. In one evaluation of a financial education program, for example, the authors did not account for other factors that could have affected the difference between the treatment and comparison groups, such as race/ethnicity. The groups also differed significantly in age, but the authors did not control for this difference in the analyses. These preexisting differences between the groups, and not the financial education program, could explain the observed differences in outcomes. Therefore, the study is not eligible for a moderate causal evidence rating, the highest rating available for nonexperimental designs.
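A minimal sketch of matching follows. It assumes a single covariate (age) and hypothetical records. The `Participant` type and the `match_nearest` function are illustrative names that do not come from any library. Real evaluations usually match on several covariates or on an estimated propensity score. The sketch performs greedy 1:1 nearest-neighbor matching without replacement.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    pid: str        # hypothetical participant identifier
    age: float      # the only covariate matched on in this sketch
    treated: bool   # True for the intervention group


def match_nearest(participants):
    """Greedy 1:1 nearest-neighbor matching on age, without replacement.

    Treated participants are processed in list order. Each is paired with the
    still-unused control whose age is closest (ties go to the control listed
    first). Treated participants left without a control are not matched.
    """
    controls = [p for p in participants if not p.treated]
    used = set()
    pairs = []
    for t in (p for p in participants if p.treated):
        candidates = [c for c in controls if c.pid not in used]
        if not candidates:
            break
        best = min(candidates, key=lambda c: abs(c.age - t.age))
        used.add(best.pid)
        pairs.append((t.pid, best.pid))
    return pairs


# Hypothetical data: three program participants and four comparison participants.
people = [
    Participant("T1", 25, True),
    Participant("T2", 40, True),
    Participant("T3", 62, True),
    Participant("C1", 23, False),
    Participant("C2", 38, False),
    Participant("C3", 55, False),
    Participant("C4", 70, False),
]
print(match_nearest(people))  # [('T1', 'C1'), ('T2', 'C2'), ('T3', 'C3')]
```

The greedy pass depends on processing order. Any characteristic left out of the matching, such as race/ethnicity in the example above, stays just as imbalanced as before.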

External validity

The pre-intervention outcome data are used to establish an underlying trend that is assumed to continue unchanged in the absence of the intervention under study (i.e., the counterfactual scenario). Any change in outcome level or trend from the counterfactual scenario in the post-intervention period is then attributed to the impact of the intervention. Individual-level difference-in-differences (DID) analyses use participant-level panel data (i.e., information collected in a consistent manner over time for a defined cohort of individuals). The Familias en Accion program in Colombia was evaluated using a DID analysis in which eligible and ineligible administrative clusters were first matched using propensity scores. The effect of the intervention was estimated as the difference between clusters that were and were not eligible for the intervention, taking into account the propensity scores on which they were matched [25]. DID analysis is credible only when unobservable factors that determine outcomes can be expected to affect both groups equally over time (the “common trends” assumption).
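For the simplest case of two groups observed once before and once after the intervention, the DID estimate reduces to arithmetic on four group means. The sketch below uses hypothetical numbers that do not come from the Familias en Accion evaluation. `did_estimate` is an illustrative name.

```python
def did_estimate(pre_treat, post_treat, pre_ctrl, post_ctrl):
    """2x2 difference-in-differences on group means.

    The control group's pre-to-post change stands in for the change the
    treated group would have shown without the intervention. This is valid
    only under the common-trends assumption.
    """
    return (post_treat - pre_treat) - (post_ctrl - pre_ctrl)


# Hypothetical mean outcomes for eligible (treated) and ineligible (control) clusters.
effect = did_estimate(pre_treat=60.0, post_treat=72.0, pre_ctrl=58.0, post_ctrl=63.0)
print(effect)  # 7.0
```

The treated clusters improved by 12 points. The control clusters improved by 5 points, and that change is attributed to factors common to both groups. This leaves 7 points attributed to the intervention. In a regression framework, the same 2x2 estimate is the coefficient on a treated × post interaction term.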

Internal validity

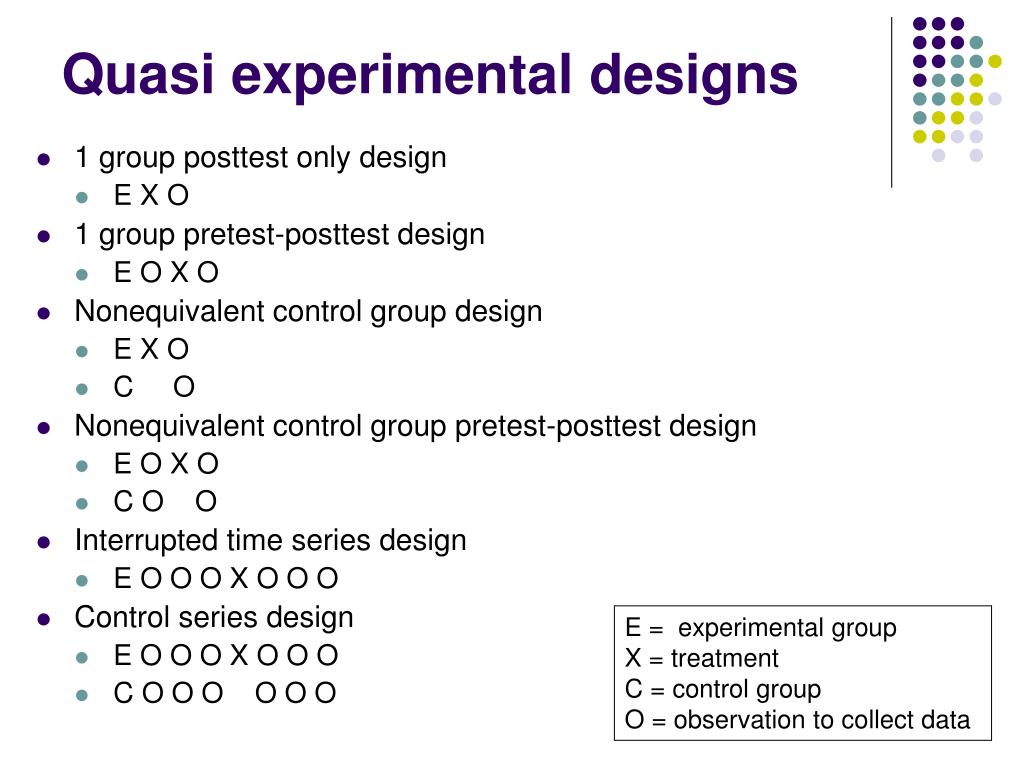

Unlike true experiments, quasi-experiments lack random assignment of participants to groups, which can make them more practical and ethical in certain situations. In this article, we will delve into the concept, applications, and advantages of quasi-experiments and their relevance to scientific research. One common quasi-experimental design is the pretest-posttest design, in which the dependent variable is measured once before the treatment is implemented and once after it is implemented.

What are the characteristics of quasi-experimental designs?

In our view, conventional design labels obscure some of the detailed features of study designs that affect the robustness of causal attribution. A type of quasi-experimental design that is generally better than either the nonequivalent groups design or the pretest-posttest design is one that combines elements of both. There is a treatment group that is given a pretest, receives a treatment, and then is given a posttest. At the same time, there is a control group that is given a pretest, does not receive the treatment, and then is given a posttest. The question, then, is not simply whether participants who receive the treatment improve but whether they improve more than participants who do not receive the treatment. In the usual notation, each group has a single pretest measurement (O1) and a posttest measurement (O2), and only the treatment group receives the intervention (X) between them.

Quasi-experimental Designs That Use a Control Group but No Pretest

However, this type of analysis alone does not satisfy the criterion of enabling adjustment for unobservable sources of confounding. It cannot rule out confounding of health outcome data by unmeasured factors, even when participants are well characterized at baseline. Internal validity is defined as the degree to which observed changes in outcomes can be correctly inferred to be caused by an exposure or an intervention. Of course, researchers using a nonequivalent groups design can take steps to ensure that their groups are as similar as possible. In the present example, the researcher could try to select two classes at the same school, where the students in the two classes have similar scores on a standardized math test and the teachers are the same sex, are close in age, and have similar teaching styles. Taking such steps would increase the internal validity of the study because it would eliminate some of the most important confounding variables.
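One common way to check whether two intact groups are "similar enough" on a measured characteristic is the standardized mean difference (SMD). The SMD is the difference in means divided by a pooled standard deviation. The sketch below assumes hypothetical math test scores for two classes and uses the average of the two sample variances as the pooled variance. Absolute values below about 0.1 are a commonly used rule of thumb for acceptable balance. Like matching, this check says nothing about unmeasured confounders.

```python
import math
from statistics import mean, variance


def standardized_mean_difference(treated, control):
    """Difference in means divided by the pooled standard deviation,
    where the pooled variance is the average of the two sample variances."""
    pooled_sd = math.sqrt((variance(treated) + variance(control)) / 2)
    return (mean(treated) - mean(control)) / pooled_sd


# Hypothetical standardized math test scores for two intact classes.
class_a = [72, 75, 78, 80, 85]
class_b = [70, 74, 77, 81, 83]
print(round(standardized_mean_difference(class_a, class_b), 3))  # 0.196
```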

Collect data over time

As we continue to explore the boundaries of research methodology, platforms like Voxco provide tools and support for conducting and analyzing studies, driving advances in knowledge and understanding. A recent article in the field of hospital epidemiology also highlights various features of what it terms quasi-experimental designs [56]. There is some overlap with our checklist, but the list described also includes several study attributes intended to reduce the risk of bias, for example, blinding. By contrast, we consider an assessment of a study’s risk of bias to be essential and to require a separate task. The table also sets out our responses for the range of study designs described in Boxes 1 and 2. The response “possibly” (P) is prevalent in the table, even given the descriptions in these boxes.


Consider weekly measurements of absences. Suppose there had been only one measurement before the treatment (at Week 7) and one afterward (at Week 8). Then it would have looked as though the treatment were responsible for the reduction. The multiple measurements both before and after the treatment instead suggest that the reduction between Weeks 7 and 8 is nothing more than normal week-to-week variation. A nonequivalent groups design is a between-subjects design in which participants have not been randomly assigned to conditions. In a related design, the researcher later administers the intervention to a group that initially served as a nonintervention control.
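The point about week-to-week variation can be checked numerically. The sketch below uses hypothetical weekly absence counts, constructed so that absences alternate up and down with no real change in level. The Week 7 to Week 8 drop, which a two-measurement design would rely on, turns out to be no larger than the typical pretreatment fluctuation.

```python
from statistics import mean

# Hypothetical weekly absence counts; the treatment is introduced after Week 7.
absences = {
    1: 9, 2: 5, 3: 10, 4: 6, 5: 9, 6: 5, 7: 10,      # pretreatment weeks
    8: 6, 9: 10, 10: 5, 11: 9, 12: 6, 13: 10, 14: 8,  # posttreatment weeks
}

# Size of every week-to-week change before the treatment.
pre_changes = [abs(absences[w + 1] - absences[w]) for w in range(1, 7)]

# The single before/after comparison a two-measurement design would rely on.
drop_7_to_8 = absences[7] - absences[8]

# Average level in each period, using all weeks.
pre_mean = mean(absences[w] for w in range(1, 8))
post_mean = mean(absences[w] for w in range(8, 15))

print(pre_changes)                      # [4, 5, 4, 3, 4, 5]
print(drop_7_to_8)                      # 4
print(drop_7_to_8 <= max(pre_changes))  # True
print(pre_mean == post_mean)            # True
```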

Imagine, for example, a researcher who is interested in the effectiveness of an antidrug education program on elementary school students’ attitudes toward illegal drugs. The researcher could measure the attitudes of students at a particular elementary school during one week, implement the antidrug program during the next week, and finally, measure their attitudes again the following week. The pretest-posttest design is much like a within-subjects experiment in which each participant is tested first under the control condition and then under the treatment condition. It is unlike a within-subjects experiment, however, in that the order of conditions is not counterbalanced. It typically is not possible for a participant to be tested in the treatment condition first and then in an “untreated” control condition. Quasi-experimental research designs have gained wide recognition in the scientific community because they allow cause-and-effect relationships to be studied in real-world settings.
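For the antidrug example, the analysis of a pretest-posttest design usually starts from each student's change score. The sketch below assumes hypothetical attitude scores for six students and computes the mean change and the paired t statistic. `paired_change` is an illustrative helper, not a library function.

```python
import math
from statistics import mean, stdev


def paired_change(pre, post):
    """Mean pre-to-post change and its paired t statistic."""
    if len(pre) != len(post):
        raise ValueError("each participant needs both a pretest and a posttest score")
    diffs = [after - before for before, after in zip(pre, post)]
    mean_change = mean(diffs)
    t_stat = mean_change / (stdev(diffs) / math.sqrt(len(diffs)))
    return mean_change, t_stat


# Hypothetical attitude scores for six students (higher = stronger opposition to drugs).
pretest = [3, 4, 2, 5, 3, 4]
posttest = [4, 5, 4, 6, 3, 6]
change, t = paired_change(pretest, posttest)
print(f"mean change = {change:.2f}, paired t = {t:.2f}")
```

Even a large t statistic only says that attitudes changed. Because the design has no control group, it cannot separate the program from anything else that happened between the two measurements.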

Experiments and Quasi-Experiments

A quasi-experimental design also aims to uncover cause-and-effect relationships between variables, but it assigns participants to groups using non-random criteria. Think of quasi-experimental design as a clever way for scientists to investigate cause and effect without the strict rules of a lab. Instead of assigning subjects randomly, researchers work with existing groups, which makes the approach more practical for real-world situations.

Health systems researchers and health economists use a wide range of “quasi-experimental” approaches to estimate causal effects of health care interventions. In the present context, these approaches are considered to use rigorous designs and methods of analysis that can enable studies to adjust for unobservable sources of confounding [6]. They are identical to the union of “strong” and “weak” quasi-experiments as defined by Rockers et al. [4]. Quasi-experimental research designs are research methodologies that resemble true experiments but lack the randomized assignment of participants to groups. In a true experiment, researchers randomly assign participants to either an experimental group or a control group, which allows the effect of an independent variable on a dependent variable to be compared across groups.
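Why random assignment matters can be shown with a small simulation. The sketch assumes a hypothetical confounder ("motivation") that raises the outcome and is unobserved by the analyst, along with a hypothetical true treatment effect of 2.0. When assignment is a coin flip, the simple difference in group means lands near 2.0. When only motivated participants opt in, the difference is about 6.8 in expectation, because those participants would have scored higher anyway. All names and parameter values are illustrative.

```python
import random
from statistics import mean

TRUE_EFFECT = 2.0  # hypothetical effect of the treatment on the outcome


def naive_difference(assign, n=20_000, seed=1):
    """Simulate n participants and return mean(treated) - mean(control).

    Each participant has a hypothetical 'motivation' score that raises the
    outcome and that the analyst does not observe. `assign` decides group
    membership from that score and a random generator.
    """
    rng = random.Random(seed)
    treated_y, control_y = [], []
    for _ in range(n):
        motivation = rng.gauss(0.0, 1.0)
        treated = assign(motivation, rng)
        outcome = TRUE_EFFECT * treated + 3.0 * motivation + rng.gauss(0.0, 1.0)
        (treated_y if treated else control_y).append(outcome)
    return mean(treated_y) - mean(control_y)


# Random assignment: a coin flip, unrelated to motivation.
randomized = naive_difference(lambda m, rng: rng.random() < 0.5)
# Non-random assignment: only the more motivated participants opt in.
self_selected = naive_difference(lambda m, rng: m > 0)

print(f"random assignment: {randomized:.2f}")
print(f"self-selection:    {self_selected:.2f}")
```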

Stepped wedge designs (SWDs) involve a sequential roll-out of an intervention to participants (individuals or clusters) over several distinct time periods (5, 7, 22, 24, 29, 30, 38). SWDs can include cohort designs (with the same individuals in each cluster in the pre- and post-intervention steps) and repeated cross-sectional designs (with different individuals in each cluster in the pre- and post-intervention steps) (7). In the SWD, there is a unidirectional, sequential roll-out of an intervention to clusters (or individuals) that occurs over different time periods. Initially all clusters (or individuals) are unexposed to the intervention. Then, at regular intervals, selected clusters cross over (or ‘step’) into a time period where they receive the intervention (Figure 3). All clusters receive the intervention by the last time interval (although not all individuals within clusters necessarily receive the intervention). Data are collected on all clusters so that each contributes data during both control and intervention time periods.
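The roll-out described above can be represented as a cluster-by-period exposure matrix. The sketch below uses a hypothetical helper (`stepped_wedge_schedule` is not from any package). It assumes equal-sized batches of clusters, one baseline period, and clusters crossing over in index order. Many stepped wedge trials instead randomize the order in which clusters cross over.

```python
def stepped_wedge_schedule(n_clusters, n_steps, clusters_per_step):
    """Cluster-by-period exposure matrix (1 = intervention, 0 = control).

    Period 0 is a baseline in which no cluster is exposed. At each of the
    n_steps later periods, the next batch of clusters crosses over. Once
    exposed, a cluster stays exposed, so the roll-out is unidirectional.
    """
    if n_clusters != n_steps * clusters_per_step:
        raise ValueError("every cluster must cross over by the last period")
    schedule = []
    for cluster in range(n_clusters):
        crossover = cluster // clusters_per_step + 1  # first exposed period
        schedule.append([1 if period >= crossover else 0
                         for period in range(n_steps + 1)])
    return schedule


schedule = stepped_wedge_schedule(n_clusters=6, n_steps=3, clusters_per_step=2)
for row in schedule:
    print(row)
# [0, 1, 1, 1]
# [0, 1, 1, 1]
# [0, 0, 1, 1]
# [0, 0, 1, 1]
# [0, 0, 0, 1]
# [0, 0, 0, 1]
```

Every row contains both zeros and ones, which reflects each cluster contributing data during both control and intervention periods.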

Because productivity increased rather quickly after the shortening of the work shifts, and because it remained elevated for many months afterward, the researcher concluded that the shortening of the shifts caused the increase in productivity. Notice that the interrupted time-series design is like a pretest-posttest design in that it includes measurements of the dependent variable both before and after the treatment. It is unlike the pretest-posttest design, however, in that it includes multiple pretest and posttest measurements.
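Interrupted time-series data are often analyzed with a segmented regression. This model estimates the preintervention trend, the immediate change in level, and the change in slope after the intervention. The sketch below is a minimal NumPy version fitted to hypothetical, noise-free monthly productivity figures, in which shorter shifts start in month 12. The pre-period trend projected forward plays the role of the counterfactual. `segmented_regression` is an illustrative name. With real, noisy series, analysts usually also account for autocorrelation and seasonality, which this sketch ignores.

```python
import numpy as np


def segmented_regression(y, start):
    """Least-squares fit of y_t = b0 + b1*t + b2*post_t + b3*(t - start)*post_t.

    b1 is the preintervention trend, b2 the immediate change in level when the
    intervention starts at index `start`, and b3 the change in slope afterwards.
    """
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y), dtype=float)
    post = (t >= start).astype(float)
    X = np.column_stack([np.ones_like(t), t, post, (t - start) * post])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


# Hypothetical, noise-free monthly productivity: a gentle upward trend, then
# a sustained jump of 8 units once shorter shifts start in month 12.
months = np.arange(24)
productivity = 100 + 0.5 * months + 8.0 * (months >= 12)

b0, b1, b2, b3 = segmented_regression(productivity, start=12)
counterfactual = b0 + b1 * months[12:]    # pre-period trend projected forward
gap = productivity[12:] - counterfactual  # estimated effect in each post month
print(np.round([b0, b1, b2, b3], 3))
print(np.round(gap, 3))
```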

Quasi-experiments are most likely to be conducted in field settings in which random assignment is difficult or impossible. They are often conducted to evaluate the effectiveness of a treatment, such as a type of psychotherapy or an educational intervention. There are many different kinds of quasi-experiments, but we will discuss just a few of the most common ones here. Quasi-experimental designs are employed when it is not ethical or logistically feasible to conduct randomized controlled trials.
